WASHINGTON, DC - After a proliferation of AI-generated videos and soundbites circulating on social media worldwide, members of Congress are taking a stand against unauthorized AI deepfakes. Several lawmakers, including U.S. Senator Marsha Blackburn of Tennessee, are working to protect individuals' voices and visual likenesses in pictures and video.

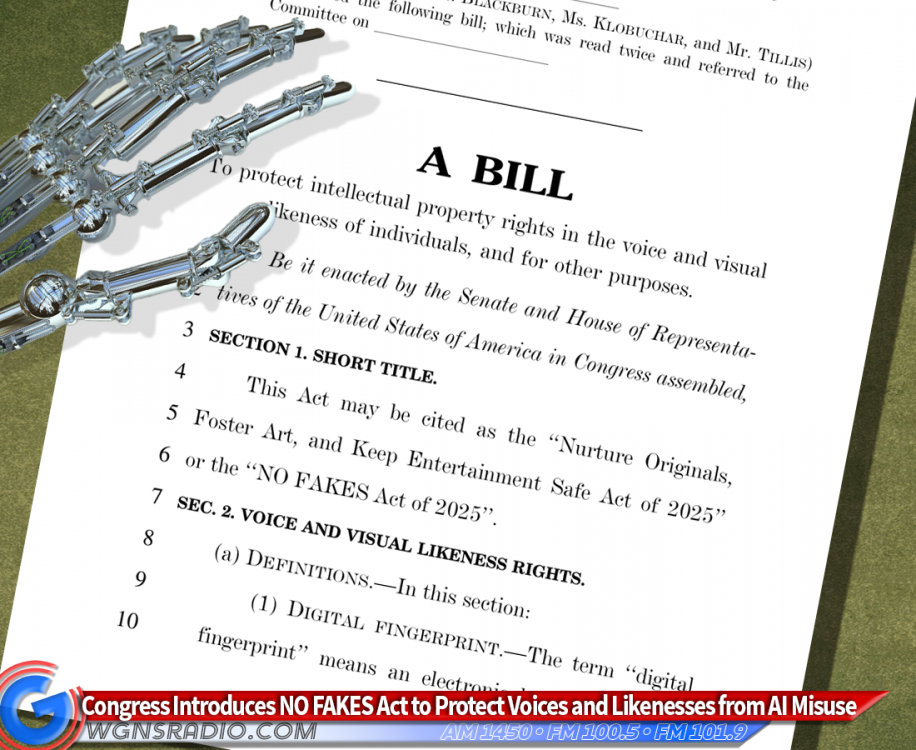

Today, U.S. Senators Marsha Blackburn (R-Tenn.), Chris Coons (D-Del.), Thom Tillis (R-N.C.), and Amy Klobuchar (D-Minn.), along with U.S. Representatives Maria Salazar (R-Fla.) and Madeleine Dean (D-Penn.), introduced the bipartisan Nurture Originals, Foster Art, and Keep Entertainment Safe (NO FAKES) Act. The bill aims to protect the voices and visual likenesses of individuals and creators from the spread of digital replicas created without their consent.

“While AI has opened the door to countless innovations, it has also exposed creators and other vulnerable individuals to online harms,” said Senator Blackburn. “Tennessee’s creative community is recognized around the globe, and the NO FAKES Act would help protect these individuals from the misuse and abuse of generative AI by holding those responsible for deepfake content to account.”

“Nobody—whether they’re Tom Hanks or an 8th grader just trying to be a kid—should worry about someone stealing their voice and likeness,” said Senator Coons. “Incredible technology like AI can help us push the limits of human creativity, but only if we protect Americans from those who would use it to harm our communities. I am grateful for the bipartisan partnership of Senators Blackburn, Klobuchar, and Tillis, the support of colleagues in the House, and the endorsements of leaders in the entertainment industry, the labor community, and firms at the cutting edge of AI technology.”

“While AI presents extraordinary opportunities for technological advancement, it also poses some new problems, including the unauthorized replication of the voice and visual likeness of individuals, such as artists,” said Senator Tillis. “We must protect against such misuse, and I’m proud to co-introduce this bipartisan legislation to create safeguards from AI, which will result in greater protections for individuals and that which defines them.”

“Americans from all walks of life are increasingly seeing AI being used to create deepfakes in ads, images, music, and videos without their consent,” said Senator Klobuchar. “We need our laws to be as sophisticated as this quickly advancing technology. The bipartisan NO FAKES Act will establish rules of the road to protect people from having their voice and likeness replicated through AI without their permission.”

“In this new era of AI, we need real laws to protect real people,” said Representative Salazar. “The NO FAKES Act is simple and sacred: you own your identity—not Big Tech, not scammers, not algorithms. Deepfakes are digital lies that ruin real lives, and it’s time to fight back.”

“As AI’s prevalence grows, federal law must catch up—we must support technological innovation while preserving the privacy, safety, and dignity of all Americans,” said Representative Dean. “By granting everyone a clear, federal right to control digital replicas of their own voice and likeness, the NO FAKES Act will empower victims of deepfakes, safeguard human creativity and artistic expression, and defend against sexually explicit deepfakes. I’m grateful to work with a bipartisan group of colleagues on common sense, common ground regulations of this new frontier of AI.”

The NO FAKES Act would address the use of non-consensual digital replicas in audiovisual works and sound recordings by:

- Holding individuals or companies liable if they distribute an unauthorized digital replica of an individual's voice or visual likeness;
- Holding platforms liable for hosting an unauthorized digital replica if the platform knows that the replica was not authorized by the individual depicted;
- Excluding certain digital replicas from coverage based on recognized First Amendment protections; and
- Preempting future state laws regulating digital replicas.